
Boosting Big-Data Developer Productivity with PyWren and dataClay

Aim for productivity

One of the main goals of CLASS is developer productivity, even when writing complex big-data analytics applications. A CLASS developer should be able to both write and execute her analytics application easily and efficiently. For application development, this means focusing on writing business logic, and minimizing boilerplate code required for application operation.

To deliver an efficient development experience of map/reduce applications in CLASS, we combine two key components of the CLASS architecture: PyWren and dataClay. PyWren is a simple Python library used for massively scaling execution of Python functions on very large data sets. This is done either by a map operation, which executes a given function independently on each record in the data set, or via a reduce operation, which executes a given function on multiple records together. Parallel execution of the computation is handled transparently by PyWren, by dispatching the functions to execute on top of a serverless platform such as Apache OpenWhisk, IBM Cloud Functions or Knative. PyWren further uses object storage, such as IBM Cloud Object Storage or Minio, for sharing code, dependencies and results.
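The map and reduce semantics described above can be illustrated with a minimal sketch that uses only the Python standard library. This is not the PyWren API: a local thread pool stands in for the serverless platform, and the helper names `run_map` and `run_map_reduce`, as well as the sample records, are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def record_length(record):
    """Map step: runs independently on a single record."""
    return len(record)


def add(a, b):
    """Reduce step: combines the results of several records."""
    return a + b


def run_map(function, records, workers=4):
    # A thread pool stands in for the serverless platform (assumption).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(function, records))


def run_map_reduce(map_function, reduce_function, records):
    # Apply the map step in parallel, then fold the partial results.
    return reduce(reduce_function, run_map(map_function, records))


records = ["car", "pedestrian", "bus"]
lengths = run_map(record_length, records)
total = run_map_reduce(record_length, add, records)
```

The developer only writes `record_length` and `add`. How the calls are dispatched and collected is hidden behind the helper functions, which is the role PyWren plays at much larger scale.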

dataClay is a distributed storage system for Python (or Java) application objects, allowing objects to be persisted and concurrently shared across components. Each dataClay object has a persistent master copy that lives on a dataClay node called a data service node. An application client can access a dataClay object in one of two ways. The first is to explicitly create a local clone of the master copy and then push state updates back to the master copy. The second is to transparently access the remote master copy at each method invocation. The dataClay object model is created off-line, prior to application execution, and is used to establish the access mechanism for each object class.
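The difference between the two access modes can be sketched in plain Python. The classes below are hypothetical stand-ins and do not use the dataClay API: `DataService` imitates a node holding master copies, `clone` and `push` imitate the explicit mode, and `RemoteProxy` imitates the transparent mode by forwarding every method call to the master copy.

```python
import copy


class Car:
    def __init__(self, speed):
        self.speed = speed

    def accelerate(self, delta):
        self.speed += delta
        return self.speed


class DataService:
    """Hypothetical stand-in for a node that holds master copies."""

    def __init__(self):
        self._masters = {}

    def store(self, object_id, obj):
        self._masters[object_id] = obj

    def clone(self, object_id):
        # Explicit mode, step 1: hand out an independent local copy.
        return copy.deepcopy(self._masters[object_id])

    def push(self, object_id, local_copy):
        # Explicit mode, step 2: write the local state back to the master.
        state = copy.deepcopy(local_copy.__dict__)
        self._masters[object_id].__dict__.update(state)

    def invoke(self, object_id, method, *args):
        # Transparent mode: run the method on the master copy itself.
        return getattr(self._masters[object_id], method)(*args)


class RemoteProxy:
    """Forwards each method invocation to the master copy."""

    def __init__(self, service, object_id):
        self._service = service
        self._object_id = object_id

    def __getattr__(self, name):
        def call(*args):
            return self._service.invoke(self._object_id, name, *args)
        return call


service = DataService()
service.store("car-1", Car(30))

local = service.clone("car-1")
local.accelerate(5)                       # changes only the local clone
speed_before_push = service.clone("car-1").speed
service.push("car-1", local)              # master now sees the update
speed_after_push = service.clone("car-1").speed

proxy = RemoteProxy(service, "car-1")
speed_after_remote_call = proxy.accelerate(10)  # executes on the master
```

In the explicit mode the master is unchanged until `push` is called, whereas every call made through the proxy takes effect on the master immediately.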

In CLASS, dataClay serves as the backbone for sharing data across the compute continuum, from the cloud to the edge, while PyWren is used to perform map/reduce computation on that data. In our quest to deliver an efficient and productive development experience in CLASS, we realised that PyWren functions need to access dataClay objects natively, so that developers can write PyWren map/reduce functions that operate directly on dataClay objects, even when the code accessing dataClay runs in many serverless functions across a cloud, as PyWren requires. For example, as part of a CLASS obstacle detection application, we may want to run a map operation that detects all the pedestrians around each car and identifies all the possible collisions, where all the cars and objects of the city are persisted in dataClay.

Integration Prototype

In the prototype we describe below, we adapted PyWren running on OpenWhisk so that PyWren client code can use the dataClay object-oriented API and models. As PyWren operates by distributing the function to compute, together with its data, into OpenWhisk actions (serverless functions), the main challenge was adding support for the dataClay APIs to the OpenWhisk PyWren action runtime. This meant extending the action image with the dataClay libraries and automatically adding dataClay boilerplate code to the action code. The prototype code is available here.

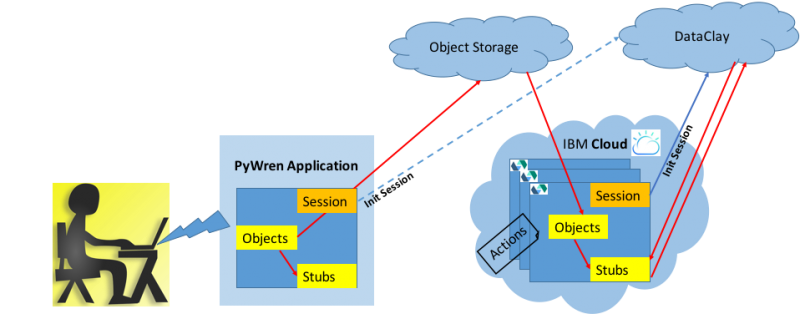

Figure 1: dataClay object distribution and analytics with PyWren

Figure 1 shows the execution of a PyWren/dataClay application using our prototype. The PyWren application obtains the object model stubs, the session and the container object reference, which are required for performing parallel computation on the many objects within the container object. When PyWren issues a parallel computation in OpenWhisk actions, these actions share the data obtained by the main application: the remote reference to the container object is shared through PyWren’s use of object storage, while the stubs, session and connection properties are shared in the build process. Each action’s code also automatically embeds new code that initializes its own connection to dataClay, using the session and connection properties that were shared in the build process. In addition, the dataClay client libraries are installed in the custom action image of the prototype. As a result, each action is able to connect to dataClay by sharing the main application’s remote references and communication details.
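One possible way to picture the automatically embedded initialization code is as a wrapper around the user's function. The sketch below is an assumption about the general shape, not the prototype's actual mechanism: `with_session` is a hypothetical helper, and the `connect` and `disconnect` callables are stand-ins that record events, where a real action would open and close its dataClay session instead.

```python
import functools


def with_session(connect, disconnect):
    """Hypothetical helper: wrap a function with session setup and teardown."""
    def decorator(function):
        @functools.wraps(function)
        def wrapper(*args, **kwargs):
            connect()                      # boilerplate added before the call
            try:
                return function(*args, **kwargs)
            finally:
                disconnect()               # boilerplate added after the call
        return wrapper
    return decorator


events = []


# Stand-in callables: they only record what happened, in order.
@with_session(lambda: events.append("init"), lambda: events.append("finish"))
def get_car_speed(car_id):
    events.append("run " + car_id)
    return 50


result = get_car_speed("c1")
```

The developer's function body contains only business logic, while the connection handling lives entirely in the wrapper.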

Programming example

The following simple example shows how to retrieve the speed of all cars, which are dataClay objects grouped in a dataClay container (of type “Cars”) called “masa_cars”.

First, the application has to initialize a session with dataClay:

from dataclay.api import init, finish

init()

Then, the model needs to be imported using its dataClay namespace:

from Cars_ns.classes import Car, Cars

Then, the reference to the cars container object is obtained by its alias:

cars = Cars.get_by_alias('masa_cars')

The following code gets the ids of all the objects in the container:

iterdata = cars.get_ids().split('-')

Finally, the map function is executed over the ids:

pw.map(get_car_speed, iterdata)

The map function uses the model-imported objects:

def get_car_speed(x):
    car = cars.get_by_id(x)
    return car.speed
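To try the map function without a dataClay deployment or a serverless platform, the same steps can be exercised against in-memory stand-ins. In the sketch below, the `Car` and `Cars` classes are hypothetical mock-ups that only imitate the `get_ids`, `get_by_id` and `speed` members used in this post, the ids and speeds are made up, and Python's built-in `map` takes the place of `pw.map`.

```python
class Car:
    """Hypothetical stand-in for the dataClay Car class."""

    def __init__(self, speed):
        self.speed = speed


class Cars:
    """Hypothetical stand-in for the dataClay Cars container."""

    def __init__(self, cars_by_id):
        self._cars_by_id = dict(cars_by_id)

    def get_ids(self):
        # Mirrors the example above: ids come back as one '-'-separated string.
        return "-".join(self._cars_by_id)

    def get_by_id(self, car_id):
        return self._cars_by_id[car_id]


# Made-up sample data in place of the persisted 'masa_cars' container.
cars = Cars({"c1": Car(42.0), "c2": Car(17.5), "c3": Car(0.0)})


def get_car_speed(x):
    car = cars.get_by_id(x)
    return car.speed


iterdata = cars.get_ids().split("-")
# The built-in map runs sequentially; pw.map would run one action per id.
speeds = list(map(get_car_speed, iterdata))
```

Because `get_car_speed` is identical to the function in the example, it can be checked locally first and then handed to `pw.map` unchanged.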

Conclusions

Developer productivity is key in CLASS. Integrating powerful components becomes even more valuable when the combined product can be used efficiently. In this blog post, we presented an example of how data distribution in dataClay and scale-out computation in PyWren can be combined into a simple and efficient programming abstraction that gives computation distributed across serverless functions direct access to dataClay objects.