
Designing a context-aware ML subsystem: SLA Predictor

Introduction

One of the main objectives of the CLASS project is to provide sound real-time guarantees on complex data-analytics workflows executed across the compute continuum, from edge to cloud.

At the Cloud Computing Platform level, we have found that scaling has a limit for some applications: beyond a certain point, it is more efficient to stop adding workers. We presume that the state of the system, in terms of resource usage, plays an important role in the final execution time. We have therefore devised a component that predicts the optimal initial number of workers (replicas in a parallel workflow) needed to achieve a target execution time given the current state of the system.

Below we describe the steps we followed to implement a Machine Learning (ML) model that predicts the execution time of an application from the state of the environment, by analyzing the system metrics provided by Prometheus.

Exploratory Data Analysis (EDA)

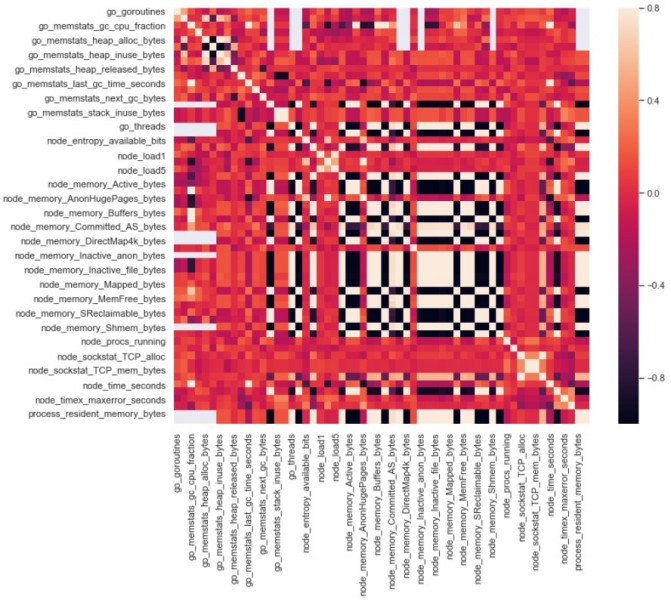

The first step is to characterize our cluster using the metrics stored in Prometheus, which are provided by Linux, Kubernetes and GoLang, among other sources. Initially we have 157 time-series metrics. The objective is to obtain their correlation matrix, that is, how strongly each metric is related to the others. The initial correlation matrix shows metrics that are either not correlated with any other metric or have empty or static values. After filtering out these irrelevant metrics, we obtain the final correlation matrix (Figure 1), made up of 57 metrics that contain useful information for our objective and that will be used to build our ML model.

Figure 1: Correlation matrix with selected metrics
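The filtering step described above can be sketched as follows. This is a minimal, standard-library-only sketch under stated assumptions, not our actual implementation: each metric is a list of samples already aligned on the same timestamps, empty or static series are discarded, and a metric is kept only if its absolute Pearson correlation with at least one other metric reaches a threshold. The threshold `min_abs_corr=0.5`, the function names and the metric names are hypothetical; the exact criterion used in the EDA is not stated here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long, non-constant series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


def select_metrics(series, min_abs_corr=0.5):
    """Return the names of the metrics worth keeping for the model.

    series: dict mapping metric name -> list of float samples (None = missing).
    min_abs_corr: hypothetical threshold, chosen only for illustration.
    """
    usable = {}
    for name, values in series.items():
        # Drop empty series and, for simplicity, series with missing samples.
        if not values or any(v is None for v in values):
            continue
        # Drop static series: a constant metric carries no information.
        if len(set(values)) <= 1:
            continue
        usable[name] = values

    names = sorted(usable)
    selected = []
    for a in names:
        # Keep a metric only if it correlates with at least one other metric.
        if any(abs(pearson(usable[a], usable[b])) >= min_abs_corr
               for b in names if b != a):
            selected.append(a)
    return selected


# Toy data (hypothetical metric names, not measurements from our cluster).
series = {
    "cpu_usage": [1.0, 2.0, 3.0, 4.0],
    "load_avg": [2.0, 4.0, 6.0, 8.5],
    "static_metric": [5.0, 5.0, 5.0, 5.0],
    "empty_metric": [],
    "noisy_metric": [1.0, -1.0, 1.0, -1.0],
}
print(select_metrics(series))  # ['cpu_usage', 'load_avg']
```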

Data acquisition and training data generation

The second step is to create our training set. First, we perform several executions of a test COMPS application in our cluster. Then, we retrieve the values of our set of metrics (obtained in the EDA) for the time at which each execution took place.

Each time the COMPS application runs, it reports its execution time. The executions take place at different times of the day and with different numbers of workers, in order to represent different levels of stress on the instances. Table 1 contains a sample of the first training set of 40 executions:

| # | Date | Hour | Execution | Execution time | Workers |
|---|------|------|-----------|----------------|---------|
| 1 | 2021/02/22 | 15:10 | T1 | 617820 | 3 |
| 10 | 2021/02/23 | 10:54 | T10 | 582628 | 3 |
| 18 | 2021/02/24 | 08:47 | T18 | 466922 | 6 |
| 27 | 2021/02/25 | 11:15 | T27 | 588759 | 1 |
| 40 | 2021/03/01 | 09:25 | T40 | 429661 | 9 |

Table 1. Executions summary sample

We have created a function to obtain the training data: for each execution, it retrieves the last hour of values of the metrics selected in the EDA section.
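A minimal sketch of such a function is shown below. It assumes the standard Prometheus HTTP API range-query endpoint (`/api/v1/query_range`) and a one-hour window ending at the execution timestamp recorded in Table 1. The Prometheus address, the 60-second step, the helper names, and summarizing each metric by its mean over the window are all assumptions made for illustration.

```python
import json
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode
from urllib.request import urlopen

PROMETHEUS_URL = "http://prometheus:9090"  # hypothetical address


def build_range_query_url(base_url, metric, end, window=timedelta(hours=1), step="60s"):
    """Build a Prometheus range-query URL covering `window` up to `end`."""
    params = {
        "query": metric,
        "start": (end - window).timestamp(),
        "end": end.timestamp(),
        "step": step,
    }
    return f"{base_url}/api/v1/query_range?{urlencode(params)}"


def parse_range_response(body):
    """Flatten the sample values of every series in a range-query response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError(f"Prometheus query failed: {payload.get('error')}")
    # Each series carries "values": [[unix_timestamp, "value_as_string"], ...]
    return [float(value)
            for series in payload["data"]["result"]
            for _, value in series["values"]]


def fetch_training_sample(metrics, execution_time, base_url=PROMETHEUS_URL):
    """Mean of each selected metric over the hour before `execution_time`.

    Averaging is an assumption; other aggregates could be used instead.
    """
    features = {}
    for metric in metrics:
        url = build_range_query_url(base_url, metric, execution_time)
        with urlopen(url, timeout=30) as response:
            samples = parse_range_response(response.read())
        features[metric] = sum(samples) / len(samples) if samples else None
    return features
```

For execution T1 in Table 1, a call would look like `fetch_training_sample(selected_metrics, datetime(2021, 2, 22, 15, 10, tzinfo=timezone.utc))`, assuming the recorded times are UTC and `selected_metrics` is the list produced by the EDA.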

Model development

Model development is the critical step. We followed different approaches to find the best model for our problem. With neural networks and regression models, we faced the following issue: although the number of workers strongly influences the execution time, these models treated it as irrelevant and predicted the same execution time regardless of the number of workers. We therefore switched to a classification model, which required reframing the problem in two steps:

1. First, we classify how stressed our system is by analysing the metric data selected in the EDA, into one of three categories: low, normal, or high.

2. Second, we obtain an estimated execution time for the sample application for each stress level and number of workers.

From the 40 experiments we extracted the “stress -> workers -> execution time” rules described in Table 2, which were used to create our training set. A default upper bound of 1000000 was set for high stress to represent situations in which the execution time can become too long.

| Workers | Stress | Execution time range |
|---------|--------|----------------------|
| 1 | Low | (550000,580000) |
| 1 | Normal | (580001,610000) |
| 1 | High | (610001,1000000) |
| 3 | Low | (550000,570000) |
| 3 | Normal | (570001,600000) |
| 3 | High | (600001,1000000) |
| 6 | Low | (430000,450000) |
| 6 | Normal | (450001,470000) |
| 6 | High | (470001,1000000) |
| 9 | Low | (390000,410000) |
| 9 | Normal | (410001,420000) |
| 9 | High | (420001,1000000) |

Table 2. Rules definition for linking workers, stress, and execution time
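How the ranges in Table 2 were derived from the experiments is not detailed above. One plausible approach, sketched here purely as an assumption, is to group the labelled executions by (workers, stress level), take the observed minimum and maximum execution time of each group, and replace the upper bound of every High range with the default cap of 1000000. The function name and input format are hypothetical, and this sketch does not make adjacent ranges contiguous as they are in Table 2.

```python
from collections import defaultdict

HIGH_STRESS_CAP = 1_000_000  # default upper bound for High stress (Table 2)


def derive_rules(executions):
    """Build {(workers, stress): (lower, upper)} from labelled executions.

    executions: iterable of (workers, stress, exec_time) tuples, where stress
    is "Low", "Normal" or "High" (input format is an assumption).
    """
    observed = defaultdict(list)
    for workers, stress, exec_time in executions:
        observed[(workers, stress)].append(exec_time)

    rules = {}
    for (workers, stress), times in observed.items():
        lower, upper = min(times), max(times)
        if stress == "High":
            # High stress is open-ended: cap it with the default upper bound.
            upper = HIGH_STRESS_CAP
        rules[(workers, stress)] = (lower, upper)
    return rules
```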

As we did with the regression model, we transformed the training set by adding the aggregated metric information for each execution. We then benchmarked several classifier models on a balanced dataset, in which all stress levels were evenly represented.
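The aggregates and the balancing technique are not specified above. The following sketch assumes the per-metric mean, minimum and maximum over the one-hour window as aggregated features, and random undersampling of each stress class down to the size of the rarest one. Both are illustrative choices, and the function names are hypothetical.

```python
import random
from collections import defaultdict


def aggregate_window(window):
    """Turn {metric: [samples]} into a flat dict of mean/min/max features."""
    features = {}
    for metric, samples in window.items():
        features[f"{metric}_mean"] = sum(samples) / len(samples)
        features[f"{metric}_min"] = min(samples)
        features[f"{metric}_max"] = max(samples)
    return features


def balance_by_undersampling(rows, labels, seed=0):
    """Randomly drop rows so every label appears as often as the rarest one."""
    by_label = defaultdict(list)
    for row, label in zip(rows, labels):
        by_label[label].append(row)
    smallest = min(len(group) for group in by_label.values())

    rng = random.Random(seed)  # fixed seed for reproducible benchmarks
    balanced_rows, balanced_labels = [], []
    for label, group in sorted(by_label.items()):
        for row in rng.sample(group, smallest):
            balanced_rows.append(row)
            balanced_labels.append(label)
    return balanced_rows, balanced_labels
```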

Based on the results of the classifier benchmark, we selected the KNeighborsClassifier model as the best one for our scenario, with an accuracy of 0.867.
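`KNeighborsClassifier` is the k-nearest-neighbours classifier from scikit-learn. To show what the technique does without depending on that library, here is a minimal standard-library sketch: the stress level of a new metric vector is the majority label among the `k` training executions closest to it in Euclidean distance, and accuracy is the fraction of test samples classified correctly. The value `k=3`, the function names and the toy data in the test are assumptions. Because kNN is distance-based, features would normally be scaled before training.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, x, k=3):
    """Majority label among the k training points nearest to x."""
    distances = sorted(
        (math.dist(point, x), label) for point, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


def accuracy(train_x, train_y, test_x, test_y, k=3):
    """Fraction of test samples whose predicted stress level is correct."""
    hits = sum(knn_predict(train_x, train_y, x, k) == y
               for x, y in zip(test_x, test_y))
    return hits / len(test_y)
```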

Exposing the model

The SLA Predictor runs as a microservice that is accessible through REST calls.

The SLA Manager calls the “predictSLA” method, passing the number of workers and the desired execution time as parameters. The SLA Predictor first queries Prometheus for the last hour of our selected metrics, then analyses the result to determine how stressed the system is, and finally replies with the number of workers that best fits the input data, based on the rules defined for “stress - workers - execution time”.
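A minimal sketch of such a REST interface, using Python's standard `http.server` module. Only the `/predictSLA` path and the `workers` and `exectime` query parameters come from this article; the handler class and the `recommend_workers` function (a hypothetical placeholder for the Prometheus query, stress classification and rule lookup described above) are assumptions.

```python
from http.server import BaseHTTPRequestHandler
from urllib.parse import parse_qs, urlparse


def parse_predict_sla_request(path):
    """Return (workers, exectime) for a /predictSLA path, or None for other paths."""
    url = urlparse(path)
    if url.path != "/predictSLA":
        return None
    query = parse_qs(url.query)
    try:
        return int(query["workers"][0]), int(query["exectime"][0])
    except (KeyError, ValueError) as exc:
        raise ValueError("workers and exectime must be integers") from exc


def recommend_workers(workers, exectime):
    """Hypothetical placeholder: query Prometheus, classify stress, apply rules."""
    return workers


class PredictSLAHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        try:
            params = parse_predict_sla_request(self.path)
        except ValueError as exc:
            self.send_error(400, str(exc))  # malformed parameters
            return
        if params is None:
            self.send_error(404)  # unknown path
            return
        body = str(recommend_workers(*params)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

The handler could be served with `http.server.HTTPServer(("", 8080), PredictSLAHandler).serve_forever()`; the port is an arbitrary choice.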

Some example calls to this endpoint:

1. `/predictSLA?workers=3&exectime=620000`
   - Internal result: `[(1, (580001, 610000)), (3, (570001, 600000)), (6, (450001, 470000)), (9, (410001, 420000))]`
   - Output: 3

2. `/predictSLA?workers=3&exectime=520000`
   - Internal result: `[(6, (450001, 470000)), (9, (410001, 420000))]`
   - Output: 6
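The exact selection rule is not spelled out, but the two examples are consistent with the reconstruction sketched below, which is an assumption rather than the actual implementation. Both internal results match the Normal rows of Table 2, so the system was presumably classified as Normal. The candidates are the worker counts whose range upper bound does not exceed the requested execution time; the requested number of workers is kept if it is a candidate, otherwise the smallest candidate is returned. Returning `None` when no candidate exists is also our assumption.

```python
# Table 2: (workers, stress) -> (lower, upper) execution-time range.
RULES = {
    (1, "Low"): (550000, 580000),
    (1, "Normal"): (580001, 610000),
    (1, "High"): (610001, 1000000),
    (3, "Low"): (550000, 570000),
    (3, "Normal"): (570001, 600000),
    (3, "High"): (600001, 1000000),
    (6, "Low"): (430000, 450000),
    (6, "Normal"): (450001, 470000),
    (6, "High"): (470001, 1000000),
    (9, "Low"): (390000, 410000),
    (9, "Normal"): (410001, 420000),
    (9, "High"): (420001, 1000000),
}


def candidates(stress, exectime, rules=RULES):
    """(workers, range) pairs whose whole range fits within the target time."""
    return [
        (workers, bounds)
        for (workers, level), bounds in sorted(rules.items())
        if level == stress and bounds[1] <= exectime
    ]


def predict_sla(stress, workers, exectime, rules=RULES):
    """Keep the requested workers if feasible, else the smallest feasible count."""
    feasible = candidates(stress, exectime, rules)
    if not feasible:
        return None  # assumption: behaviour for this case is not described
    counts = [w for w, _ in feasible]
    return workers if workers in counts else min(counts)
```

With `stress="Normal"`, `candidates` reproduces both internal results listed above, and `predict_sla` returns 3 and 6 respectively.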

Conclusion

With the new SLA Predictor component, we have reduced the execution time of the sample application and thus contributed to meeting the global time-guarantee constraint. To assess the improvement, we conducted some experiments; their results are described in the article “SLA Predictor to provide Real-time guarantees from the CLASS Cloud Computing Platform” on our blog.