The road towards edge recognition

In the heart of the city of Modena, the joint efforts of the Municipality of Modena and the University of Modena and Reggio Emilia (UNIMORE) are leading to the creation of an area for autonomous driving. In this context, UNIMORE and the Municipality have joined forces with BSC, IBM, Atos and Maserati in the H2020 CLASS European project, led by BSC.

In 2017, the Modena Automotive Smart Area (MASA) program was launched with UNIMORE, the MIT (Italian Ministry of Transportation) and numerous companies from the automotive and ICT sectors, as well as traditional companies from the mechanical sector.

Thanks to the European project CLASS, the Municipality of Modena is the first to install an IoT sensor network that analyses real-time mobility data and parameters for monitoring the quality of life in urban areas, alongside the local agencies' control units. The research and testing activities concern interactions between vehicles, interactions between a vehicle and moving obstacles, and interactions between a vehicle and the city. MASA is featured as a case study, unique among urban areas, in the report prepared by the National Technical Committee B.1, established within the framework of the AIPCR (World Road Association).

In the MASA, the Municipality of Modena will complement the traditional cameras already in place with intelligent cameras, to be installed by the end of the first half of 2019, to guarantee constant and complete monitoring of the area. Data will be generated and collected continuously from IoT devices and sensors located in the MASA and on cars with high-tech equipment, in the form of anonymous numeric metadata.

The project will deploy a fog computing network spanning a wide urban area and collecting a large amount of data in real time, to be processed and used for semi-autonomous driving applications. Real-time processing of the data coming from hundreds of cameras will be ensured by a distributed edge computing system composed of high-performance embedded devices featuring highly parallel computing engines.

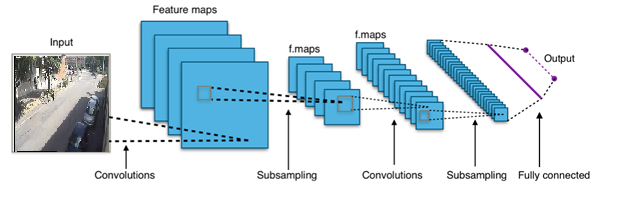

To make this dream come true, the first step is to find a way to identify the objects in camera images and to understand how they are positioned in space. This is done with Neural Networks (NN) and Deep Learning, a branch of Artificial Intelligence (AI) that deals with multilayer perceptrons: systems composed of many stacked layers ("deep" models) of basic artificial neurons. Multilayer perceptrons are bio-inspired statistical models capable of approximating complex functions by learning from a set of input/output pairs sampled from their environment; backpropagation and Stochastic Gradient Descent (SGD) are the numerical optimization foundations of the learning capability of NNs.
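As a toy illustration of the learning mechanism described above, the following minimal sketch trains a one-hidden-layer perceptron with backpropagation and per-sample SGD, using only the Python standard library. It is not the network used in CLASS: the target function (y = x²), the layer size, the learning rate and the function name `train_mlp` are all assumptions chosen for illustration.

```python
import math
import random

def train_mlp(samples, hidden=8, lr=0.05, epochs=300, seed=0):
    """Fit a 1-input, 1-output MLP (one tanh hidden layer) with per-sample SGD.

    Returns the mean squared error before and after training.
    All hyperparameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    w1 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                                   # hidden biases
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                              # output bias

    def forward(x):
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        y = sum(w2[j] * h[j] for j in range(hidden)) + b2
        return h, y

    def mse():
        return sum((forward(x)[1] - t) ** 2 for x, t in samples) / len(samples)

    initial_loss = mse()
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # "stochastic": visit the samples in random order
        for x, t in data:
            h, y = forward(x)
            dy = 2.0 * (y - t)  # derivative of the squared error w.r.t. the output
            for j in range(hidden):
                # Backpropagate the error through the tanh unit (uses the old w2[j]).
                dh = dy * w2[j] * (1.0 - h[j] ** 2)
                w2[j] -= lr * dy * h[j]
                w1[j] -= lr * dh * x
                b1[j] -= lr * dh
            b2 -= lr * dy
    return initial_loss, mse()

# Input/output pairs sampled from the function to approximate (y = x^2 on [-1, 1]).
samples = [(k / 10, (k / 10) ** 2) for k in range(-10, 11)]
initial_loss, final_loss = train_mlp(samples)
print(f"MSE before training: {initial_loss:.4f}, after training: {final_loss:.4f}")
```

The networks used for object detection follow the same principle, but with convolutional layers and millions of parameters instead of a handful of weights.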

The Convolutional Neural Network (CNN) model for object detection that will be used as the baseline for further advancement during the project belongs to the YOLO family [1], the state of the art for object detection via CNN. Most other detection systems apply their model to an image at multiple locations and scales, and then return the bounding boxes that achieve the highest scores. YOLO uses a totally different approach: it applies a single neural network to the full input image; the network divides the image into smaller regions and predicts bounding boxes and class probabilities for each region. The bounding boxes are then weighted by the predicted probabilities, and only those whose score exceeds a fixed threshold are returned. The full YOLO model requires a lot of memory and computation, so the authors proposed two reduced versions of the original model, called YOLO-Small and YOLO-Tiny, which are better suited to our needs, namely running on embedded platforms with low energy consumption.
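The final filtering step described above can be sketched as follows. This is a simplified illustration, not the YOLO implementation: the `CellPrediction` structure, the `filter_detections` function, the sample values and the 0.5 threshold are hypothetical, and real detectors additionally apply non-maximum suppression to remove overlapping boxes, which is omitted here.

```python
from dataclasses import dataclass

@dataclass
class CellPrediction:
    """One box predicted for one grid region (hypothetical structure)."""
    row: int            # grid row of the region
    col: int            # grid column of the region
    box: tuple          # (x_center, y_center, width, height), normalized to [0, 1]
    objectness: float   # confidence that the box contains an object
    class_probs: dict   # class label -> conditional class probability

def filter_detections(predictions, threshold=0.5):
    """Weight each box by its best class probability and keep those above threshold."""
    detections = []
    for p in predictions:
        label, prob = max(p.class_probs.items(), key=lambda kv: kv[1])
        score = p.objectness * prob  # box weighted by the predicted probability
        if score > threshold:
            detections.append((label, round(score, 3), p.box))
    # Highest-scoring detections first.
    return sorted(detections, key=lambda d: d[1], reverse=True)

# Made-up predictions for three regions of an image.
predictions = [
    CellPrediction(2, 3, (0.45, 0.40, 0.20, 0.15), 0.9, {"car": 0.8, "pedestrian": 0.2}),
    CellPrediction(5, 1, (0.10, 0.80, 0.05, 0.12), 0.3, {"car": 0.1, "pedestrian": 0.9}),
    CellPrediction(4, 6, (0.85, 0.60, 0.08, 0.18), 0.8, {"cyclist": 0.7, "car": 0.3}),
]
detections = filter_detections(predictions, threshold=0.5)
for label, score, box in detections:
    print(label, score, box)
```

With these sample values, the second prediction is discarded because its weighted score (0.3 × 0.9) falls below the threshold, while the other two are kept.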

The smart cameras in the city will be equipped with the embedded Tegra X2 module [2] [3], which represents the state of the art among commercially available GP-GPUs for the embedded domain.

At this point, the dream of building a safer city in Modena, with intelligent and aware traffic management, is almost a reality thanks to the CLASS project.

[1] J. Redmon and A. Farhadi, "YOLO9000: Better, Faster, Stronger," arXiv preprint arXiv:1612.08242, 2016.

[2] NVIDIA, "Jetson TX2 Delivers Twice the Intelligence on the Edge," 2018. [Online].

[3] NVIDIA, "Jetson TX2 Data Sheet," 2018. [Online]. Available: https://www.docdroid.net/yGXIxZu/data-sheet-nvidia-jetson-tx2-system-on-module.pdf.