
An efficient distribution of big-data analytics workloads across the compute continuum

Current trends towards the use of big data technologies in smart cities point to the need for novel software development ecosystems upon which advanced mobility functionalities can be built. These new ecosystems must be capable of collecting and processing vast amounts of geographically distributed data and transforming them into valuable knowledge for the public sector, private companies and citizens. CLASS addresses this need by developing a software architecture framework that efficiently exploits the computing capabilities of the compute continuum, from edge to cloud, while providing real-time guarantees on the response times of the big-data analytics processes.

COMPSs is at the heart of the big-data software architecture: it is the component responsible for efficiently distributing the different data analytics methods described as a single workflow across the compute continuum. To that end, we develop new scheduling strategies that rely on the timing characterization of the data analytics workflows and the devices available across the compute continuum.

Workflow and compute continuum models

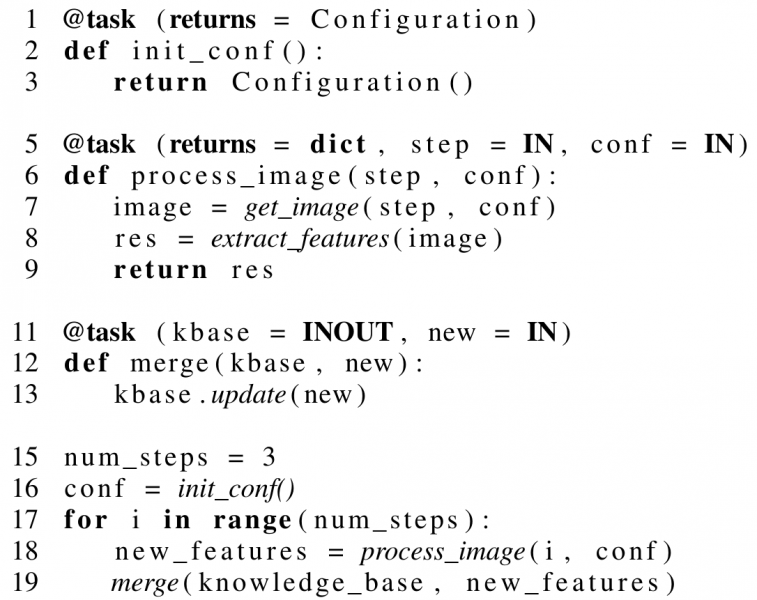

Figure 1. Example of a Python application annotated with COMPSs decorators.

COMPSs provides a simple yet powerful tasking programming model, in which the programmer identifies the data analytics functions, named COMPSs tasks, that can be distributed and (potentially) executed as asynchronous parallel operations, together with the data dependencies among them. The COMPSs runtime is then in charge of selecting the most suitable computing resources on which the tasks execute, while managing the data dependencies among them and the data transfers these imply.

Figure 1 shows an example of a COMPSs data analytics workflow in which different features are extracted from an image and merged into a common knowledge base. COMPSs tasks, corresponding to the different analytics methods, are identified with a standard Python decorator @task at lines 1, 5, and 11. The @task decorator can include a returns argument that specifies the data type of the value returned by the function or method (if any). Moreover, the directionality of the data arguments of the function can be defined as IN, OUT or INOUT. This information defines the data dependencies between COMPSs tasks and thereby describes the data analytics workflow.
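As a complement to the description above, the following is a minimal sketch of how such annotations might look in a Python program. It is not the code shown in Figure 1: the function names, the toy "image" (a list of pixel intensities) and the feature computations are hypothetical, and the stand-in decorator in the `except` branch is only an assumption made so that the sketch also runs sequentially on a machine without COMPSs installed.

```python
try:
    # Python binding of COMPSs (PyCOMPSs).
    from pycompss.api.task import task
    from pycompss.api.parameter import INOUT
    from pycompss.api.api import compss_wait_on
except ImportError:
    # Assumption: no COMPSs installation available. These stand-ins make
    # every task run as a plain, sequential Python function.
    INOUT = "INOUT"

    def task(*args, **kwargs):
        def decorator(func):
            return func
        return decorator

    def compss_wait_on(obj):
        return obj


@task(returns=dict)
def detect_objects(image):
    # Hypothetical analytics method: count bright pixels as "objects".
    return {"objects": sum(1 for px in image if px > 128)}


@task(returns=dict)
def estimate_brightness(image):
    # Hypothetical analytics method: mean pixel intensity.
    return {"brightness": sum(image) / len(image)}


@task(knowledge_base=INOUT)
def merge(knowledge_base, features):
    # INOUT: the knowledge base is both read and modified by this task.
    knowledge_base.update(features)


def run_workflow(image):
    knowledge_base = {}
    # The two extraction tasks share no data, so they may run in parallel.
    objects = detect_objects(image)
    brightness = estimate_brightness(image)
    # Each merge depends on one extraction result and on the knowledge base.
    merge(knowledge_base, objects)
    merge(knowledge_base, brightness)
    # Synchronize: wait for the final value of the knowledge base.
    return compss_wait_on(knowledge_base)
```

Calling `run_workflow([0, 200, 255, 10])` in the sequential fallback yields a knowledge base holding both features. The programmer writes ordinary sequential code, and the dependencies follow from the declared return values and parameter directions.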

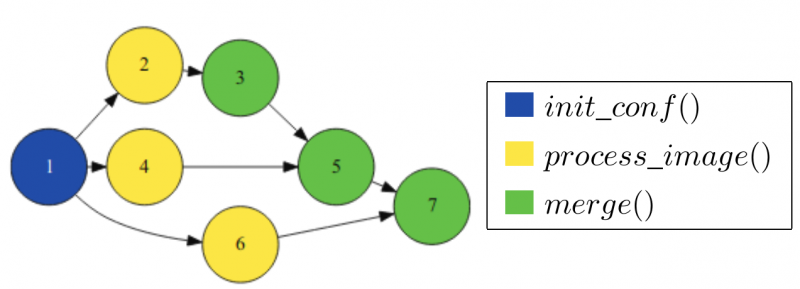

Interestingly, the execution of a COMPSs workflow can be represented and characterized as a Directed Acyclic Graph (DAG), in which nodes are COMPSs tasks and edges are the data dependencies among them. Figure 2 shows the DAG representation of the COMPSs workflow presented in Figure 1.

Figure 2. DAG representation of the COMPSs application presented in Figure 1.
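To make the DAG view concrete, here is a small sketch that encodes a workflow as a mapping from each task to the set of tasks it depends on, and groups tasks into stages that could run in parallel. The task names and the graph itself are hypothetical, chosen for illustration rather than taken from Figure 2, and the stage computation uses Python's standard `graphlib` module, not the COMPSs runtime.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
dag = {
    "detect_objects": set(),
    "estimate_brightness": set(),
    "merge_1": {"detect_objects"},
    "merge_2": {"estimate_brightness", "merge_1"},
}


def parallel_stages(graph):
    """Group tasks into stages; tasks in the same stage are independent."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies satisfied
        stages.append(ready)
        sorter.done(*ready)
    return stages
```

For the graph above, `parallel_stages(dag)` places the two extraction tasks in the first stage and each merge in a stage of its own, which is the parallelism a runtime could exploit.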

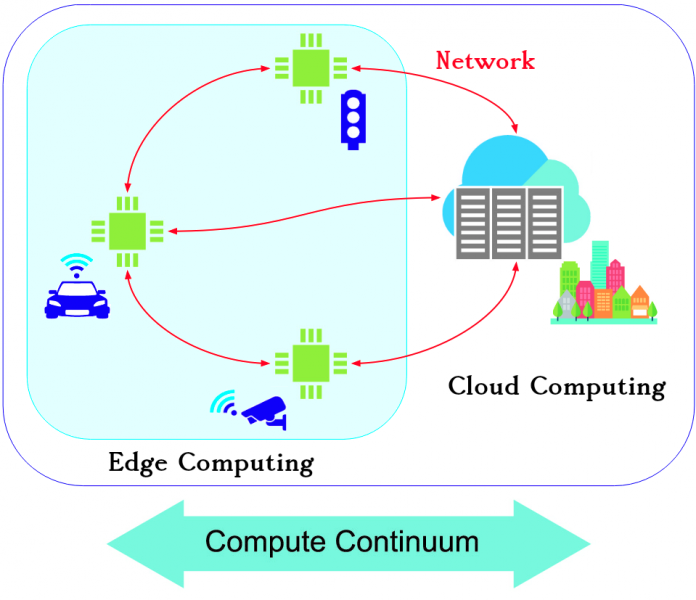

We also characterize the computation and network communication capabilities of the compute continuum composed of connected edge and cloud resources. As an example, Figure 3 shows a compute continuum model composed of four computing resources: three edge resources attached to a car, a traffic light and a smart camera, and one cloud resource. The communication links between computing resources, e.g., optical fiber, 4G/5G, are represented as red arrows.

Figure 3. Digraph representation of an example compute continuum.
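A compute continuum model of this kind can be sketched as a directed graph whose nodes are resources and whose edges are communication links. All names and numbers below (relative speeds, bandwidths, latencies) are assumptions made for illustration; they are not measurements from the project, and the linear transfer-time formula is a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str     # "edge" or "cloud"
    speed: float  # relative compute speed (assumed values)


@dataclass(frozen=True)
class Link:
    bandwidth_mbps: float  # megabits per second
    latency_ms: float


# Four resources, as in the example: three edge devices and one cloud.
resources = {
    "car": Resource("car", "edge", 1.0),
    "traffic_light": Resource("traffic_light", "edge", 0.5),
    "camera": Resource("camera", "edge", 0.8),
    "cloud": Resource("cloud", "cloud", 10.0),
}

# Directed links (source, destination); the values are hypothetical.
links = {
    ("car", "cloud"): Link(bandwidth_mbps=50.0, latency_ms=20.0),
    ("traffic_light", "cloud"): Link(bandwidth_mbps=1000.0, latency_ms=2.0),
    ("camera", "cloud"): Link(bandwidth_mbps=100.0, latency_ms=5.0),
    ("camera", "traffic_light"): Link(bandwidth_mbps=100.0, latency_ms=1.0),
}


def transfer_time_ms(src, dst, size_mb):
    """Estimated time to move size_mb megabytes from src to dst."""
    if src == dst:
        return 0.0  # data is already local
    link = links[(src, dst)]  # KeyError if no direct link exists
    return link.latency_ms + size_mb * 8 * 1000 / link.bandwidth_mbps
```

For instance, `transfer_time_ms("camera", "cloud", 1.0)` combines the 5 ms latency with the time needed to push 8 megabits over a 100 Mbps link.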

Building on this system representation, we develop a novel allocation strategy, inspired by real-time scheduling techniques, that evaluates which edge or cloud computing resource is most suitable to execute each distributed COMPSs task. The evaluation is based on several factors, including the timing characterization of the tasks, the data sources they process and their dependencies, and the performance capabilities of the resources. The objective is to guarantee an upper bound on the end-to-end response time of the data analytics workflow, and to minimize that bound.
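The flavour of such an allocation decision can be illustrated with a simple greedy heuristic. This is not the CLASS allocation strategy, whose details are not given here; it is a stand-in that visits tasks in dependency order and places each one on the resource giving the earliest worst-case finish time. The task names, worst-case execution times, single flat transfer cost, and the assumption that each resource runs one task at a time are all hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical worst-case execution times (ms) per task and resource.
wcet = {
    "extract": {"camera": 40.0, "cloud": 15.0},
    "classify": {"camera": 90.0, "cloud": 20.0},
    "merge": {"camera": 15.0, "cloud": 5.0},
}
# Each task maps to the set of tasks whose output it consumes.
deps = {
    "extract": set(),
    "classify": {"extract"},
    "merge": {"extract", "classify"},
}
TRANSFER_MS = 30.0      # assumed worst-case cost of moving data between resources
DATA_SOURCE = "camera"  # where the input of tasks without predecessors is produced


def allocate(wcet, deps, transfer_ms, data_source):
    """Greedy earliest-finish-time placement; returns (placement, bound_ms)."""
    placement, finish, free_at = {}, {}, {}
    for t in TopologicalSorter(deps).static_order():
        best = None
        for res, cost in wcet[t].items():
            if deps[t]:
                # Inputs arrive once every predecessor has finished and,
                # if it ran elsewhere, its output has been transferred.
                ready = max(
                    finish[p] + (0.0 if placement[p] == res else transfer_ms)
                    for p in deps[t]
                )
            else:
                ready = 0.0 if res == data_source else transfer_ms
            start = max(ready, free_at.get(res, 0.0))  # resource must be idle
            end = start + cost
            if best is None or end < best[0]:
                best = (end, res)
        finish[t], placement[t] = best
        free_at[best[1]] = best[0]
    return placement, max(finish.values())
```

With the values above, the heuristic keeps `extract` next to the camera, because offloading it would cost more in transfer than it saves in computation, and moves the two heavier tasks to the cloud. Because only worst-case figures are used, the returned time is an upper bound on the response time under this simplified model, though the greedy choice does not guarantee that the bound is the smallest achievable.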